Firecrawl

🔥 Turn entire websites into LLM-ready markdown or structured data. Scrape, crawl and extract with a single API. - xiyuefox/firecrawl


<h3 align="center"> <a name="readme-top"></a> <img src="https://raw.githubusercontent.com/mendableai/firecrawl/main/img/firecrawl_logo.png" height="200">

</h3> <div align="center"> <a href="https://github.com/mendableai/firecrawl/blob/main/LICENSE"> <img src="https://img.shields.io/github/license/mendableai/firecrawl" alt="License"> </a> <a href="https://pepy.tech/project/firecrawl-py"> <img src="https://static.pepy.tech/badge/firecrawl-py" alt="Downloads"> </a> <a href="https://GitHub.com/mendableai/firecrawl/graphs/contributors"> <img src="https://img.shields.io/github/contributors/mendableai/firecrawl.svg" alt="GitHub Contributors"> </a> <a href="https://firecrawl.dev"> <img src="https://img.shields.io/badge/Visit-firecrawl.dev-orange" alt="Visit firecrawl.dev"> </a> </div> <div> <p align="center"> <a href="https://twitter.com/firecrawl_dev"> <img src="https://img.shields.io/badge/Follow%20on%20X-000000?style=for-the-badge&logo=x&logoColor=white" alt="Follow on X" /> </a> <a href="https://www.linkedin.com/company/104100957"> <img src="https://img.shields.io/badge/Follow%20on%20LinkedIn-0077B5?style=for-the-badge&logo=linkedin&logoColor=white" alt="Follow on LinkedIn" /> </a> <a href="https://discord.com/invite/gSmWdAkdwd"> <img src="https://img.shields.io/badge/Join%20our%20Discord-5865F2?style=for-the-badge&logo=discord&logoColor=white" alt="Join our Discord" /> </a> </p> </div>

🔥 Firecrawl

Empower your AI apps with clean data from any website. Firecrawl features advanced scraping, crawling, and data extraction capabilities.

This repository is in development, and we’re still integrating custom modules into the monorepo. It's not fully ready for self-hosted deployment yet, but you can run it locally.

What is Firecrawl?

Firecrawl is an API service that takes a URL, crawls it, and converts it into clean markdown or structured data. We crawl all accessible subpages and give you clean data for each. No sitemap required. Check out our documentation.

Pst. hey, you, join our stargazers :)

<a href="https://github.com/mendableai/firecrawl"> <img src="https://img.shields.io/github/stars/mendableai/firecrawl.svg?style=social&label=Star&maxAge=2592000" alt="GitHub stars"> </a>

How to use it?

We provide an easy-to-use API with our hosted version. You can find the playground and documentation here. You can also self-host the backend if you'd like.

Check out the following resources to get started:

- [x] API: Documentation

- [x] SDKs: Python, Node, Go, Rust

- [x] LLM Frameworks: Langchain (python), Langchain (js), Llama Index, Crew.ai, Composio, PraisonAI, Superinterface, Vectorize

- [x] Low-code Frameworks: Dify, Langflow, Flowise AI, Cargo, Pipedream

- [x] Others: Zapier, Pabbly Connect

- [ ] Want an SDK or Integration? Let us know by opening an issue.

To run locally, refer to the guide here.

API Key

To use the API, you need to sign up on Firecrawl and get an API key.
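As a minimal sketch (not part of any Firecrawl SDK), the helper below builds the two headers that every curl example in this README sends. Reading the key from an environment variable named FIRECRAWL_API_KEY is an assumption borrowed from the Node SDK instructions; firecrawl_headers is a hypothetical name.

```python
import os


def firecrawl_headers(api_key=None):
    """Return the JSON and Bearer-auth headers used by the curl examples.

    Hypothetical helper: falls back to the FIRECRAWL_API_KEY environment
    variable (an assumed convention borrowed from the Node SDK section).
    """
    key = api_key or os.environ.get("FIRECRAWL_API_KEY")
    if not key:
        raise RuntimeError("Set FIRECRAWL_API_KEY or pass api_key explicitly")
    return {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {key}",
    }
```

For example, firecrawl_headers("fc-YOUR_API_KEY") returns both headers, with Authorization set to "Bearer fc-YOUR_API_KEY".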

Features

- Scrape: scrapes a URL and gets its content in LLM-ready format (markdown, structured data via LLM Extract, screenshot, HTML)

- Crawl: scrapes all the URLs of a website and returns their content in LLM-ready format

- Map: input a website and get all of its URLs, extremely fast

- Extract: get structured data from a single page, multiple pages, or entire websites with AI.

Powerful Capabilities

- LLM-ready formats: markdown, structured data, screenshot, HTML, links, metadata

- The hard stuff: proxies, anti-bot mechanisms, dynamic content (js-rendered), output parsing, orchestration

- Customizability: exclude tags, crawl behind auth walls with custom headers, max crawl depth, etc.

- Media parsing: PDFs, DOCX, images

- Reliability first: designed to get the data you need, no matter how hard it is

- Actions: click, scroll, input, wait and more before extracting data

- Batching (New): scrape thousands of URLs at the same time with a new async endpoint.

You can find all of Firecrawl's capabilities and how to use them in our documentation.

Crawling

Used to crawl a URL and all accessible subpages. This submits a crawl job and returns a job ID to check the status of the crawl.

```bash
curl -X POST https://api.firecrawl.dev/v1/crawl \
    -H 'Content-Type: application/json' \
    -H 'Authorization: Bearer fc-YOUR_API_KEY' \
    -d '{
      "url": "https://docs.firecrawl.dev",
      "limit": 10,
      "scrapeOptions": {
        "formats": ["markdown", "html"]
      }
    }'
```

Returns a crawl job ID and the URL to check the status of the crawl.

```json
{
  "success": true,
  "id": "123-456-789",
  "url": "https://api.firecrawl.dev/v1/crawl/123-456-789"
}
```
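The same submission can be made with Python's standard library. This is a sketch, not an official client: build_crawl_request and parse_crawl_submission are hypothetical helpers that mirror the curl call and the response shape shown above, and the request is only constructed here, not sent.

```python
import json
import urllib.request

API_BASE = "https://api.firecrawl.dev/v1"  # base URL taken from the curl examples


def build_crawl_request(api_key, url, limit=10, formats=("markdown", "html")):
    """Build (but do not send) a POST /v1/crawl request like the curl example."""
    body = {
        "url": url,
        "limit": limit,
        "scrapeOptions": {"formats": list(formats)},
    }
    return urllib.request.Request(
        f"{API_BASE}/crawl",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def parse_crawl_submission(raw):
    """Return (job ID, status URL) from the submission response body."""
    payload = json.loads(raw)
    if not payload.get("success"):
        raise RuntimeError(f"crawl submission failed: {payload}")
    return payload["id"], payload["url"]
```

To actually submit the job, pass the request to urllib.request.urlopen and hand the response body to parse_crawl_submission.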

Check Crawl Job

Used to check the status of a crawl job and get its result.

```bash
curl -X GET https://api.firecrawl.dev/v1/crawl/123-456-789 \
    -H 'Content-Type: application/json' \
    -H 'Authorization: Bearer YOUR_API_KEY'
```

```json
{
  "status": "completed",
  "total": 36,
  "creditsUsed": 36,
  "expiresAt": "2024-00-00T00:00:00.000Z",
  "data": [
    {
      "markdown": "[Firecrawl Docs home page!...",
      "html": "<!DOCTYPE html><html lang=\"en\" class=\"js-focus-visible lg:[--scroll-mt:9.5rem]\" data-js-focus-visible=\"\">...",
      "metadata": {
        "title": "Build a 'Chat with website' using Groq Llama 3 | Firecrawl",
        "language": "en",
        "sourceURL": "https://docs.firecrawl.dev/learn/rag-llama3",
        "description": "Learn how to use Firecrawl, Groq Llama 3, and Langchain to build a 'Chat with your website' bot.",
        "ogLocaleAlternate": [],
        "statusCode": 200
      }
    }
  ]
}
```
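Because a crawl runs asynchronously, a client typically polls the status endpoint until the job finishes. A minimal polling sketch follows. wait_for_crawl is hypothetical, fetch_status stands in for whatever performs GET /v1/crawl/{id}, and treating "failed" as a terminal state is an assumption, since only "completed" appears in the example response; every other status is treated as still in progress.

```python
import time


def wait_for_crawl(fetch_status, poll_interval=5.0, timeout=600.0, sleep=time.sleep):
    """Poll until the crawl job reports "completed", then return that status.

    fetch_status: zero-argument callable returning the parsed JSON of
    GET /v1/crawl/{id}. The "failed" state is an assumption, not from the README.
    """
    waited = 0.0
    while True:
        status = fetch_status()
        state = status.get("status")
        if state == "completed":
            return status
        if state == "failed":
            raise RuntimeError(f"crawl failed: {status}")
        if waited >= timeout:
            raise TimeoutError(f"crawl still {state!r} after {waited:.0f}s")
        sleep(poll_interval)  # injectable so callers (and tests) can avoid real waits
        waited += poll_interval
```

Injecting sleep and fetch_status keeps the loop independent of any particular HTTP client.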

Scraping

Used to scrape a URL and get its content in the specified formats.

```bash
curl -X POST https://api.firecrawl.dev/v1/scrape \
    -H 'Content-Type: application/json' \
    -H 'Authorization: Bearer YOUR_API_KEY' \
    -d '{
      "url": "https://docs.firecrawl.dev",
      "formats" : ["markdown", "html"]
    }'
```

Response:

```json
{
  "success": true,
  "data": {
    "markdown": "Launch Week I is here! [See our Day 2 Release 🚀](https://www.firecrawl.dev/blog/launch-week-i-day-2-doubled-rate-limits)[💥 Get 2 months free...",
    "html": "<!DOCTYPE html><html lang=\"en\" class=\"light\" style=\"color-scheme: light;\"><body class=\"__variable_36bd41 __variable_d7dc5d font-inter ...",
    "metadata": {
      "title": "Home - Firecrawl",
      "description": "Firecrawl crawls and converts any website into clean markdown.",
      "language": "en",
      "keywords": "Firecrawl,Markdown,Data,Mendable,Langchain",
      "robots": "follow, index",
      "ogTitle": "Firecrawl",
      "ogDescription": "Turn any website into LLM-ready data.",
      "ogUrl": "https://www.firecrawl.dev/",
      "ogImage": "https://www.firecrawl.dev/og.png?123",
      "ogLocaleAlternate": [],
      "ogSiteName": "Firecrawl",
      "sourceURL": "https://firecrawl.dev",
      "statusCode": 200
    }
  }
}
```
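A short sketch of reading the fields shown in this response. summarize_scrape is a hypothetical helper, and it assumes the response body has already been parsed from JSON into a dict.

```python
def summarize_scrape(response):
    """Return (sourceURL, title, statusCode, markdown) from a /v1/scrape body.

    Hypothetical helper; `response` is the already-parsed JSON dict.
    """
    if not response.get("success"):
        raise RuntimeError(f"scrape failed: {response}")
    data = response["data"]
    meta = data.get("metadata", {})
    return (
        meta.get("sourceURL"),
        meta.get("title"),
        meta.get("statusCode"),  # HTTP status of the scraped page itself
        data.get("markdown", ""),
    )
```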

Map (Alpha)

Used to map a URL and get the URLs of the website. This returns most links present on the website.

```bash
curl -X POST https://api.firecrawl.dev/v1/map \
    -H 'Content-Type: application/json' \
    -H 'Authorization: Bearer YOUR_API_KEY' \
    -d '{
      "url": "https://firecrawl.dev"
    }'
```

Response:

```json
{
  "status": "success",
  "links": [
    "https://firecrawl.dev",
    "https://www.firecrawl.dev/pricing",
    "https://www.firecrawl.dev/blog",
    "https://www.firecrawl.dev/playground",
    "https://www.firecrawl.dev/smart-crawl"
  ]
}
```
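If you only want part of a site, one option is to filter the mapped links client-side before scraping them. links_under below is a hypothetical helper that keeps links under a path prefix; treating "www." and bare hosts, and trailing slashes, as the same page is an assumption. It needs Python 3.9+ for str.removeprefix.

```python
from urllib.parse import urlparse


def links_under(links, path_prefix):
    """Keep mapped links whose path starts with path_prefix, in original order.

    Hypothetical helper. Assumes "www." and bare hosts, and trailing slashes,
    refer to the same page, so those variants are treated as duplicates.
    """
    seen = set()
    kept = []
    for link in links:
        parts = urlparse(link)
        key = (parts.netloc.removeprefix("www."), parts.path.rstrip("/"))
        if parts.path.startswith(path_prefix) and key not in seen:
            seen.add(key)
            kept.append(link)
    return kept
```

Applied to the links above, links_under(links, "/blog") keeps only "https://www.firecrawl.dev/blog".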

Map with search

The search parameter lets you search for specific URLs inside a website.

```bash
curl -X POST https://api.firecrawl.dev/v1/map \
    -H 'Content-Type: application/json' \
    -H 'Authorization: Bearer YOUR_API_KEY' \
    -d '{
      "url": "https://firecrawl.dev",
      "search": "docs"
    }'
```

The response is an ordered list, from most to least relevant.

```json
{
  "status": "success",
  "links": [
    "https://docs.firecrawl.dev",
    "https://docs.firecrawl.dev/sdks/python",
    "https://docs.firecrawl.dev/learn/rag-llama3"
  ]
}
```

Extract

Get structured data from entire websites with a prompt and/or a schema.

You can extract structured data from one or multiple URLs, including wildcards:

- Single page, for example: https://firecrawl.dev/some-page

- Multiple pages / full domain, for example: https://firecrawl.dev/*

When you use /*, Firecrawl will automatically crawl and parse all URLs it can discover in that domain, then extract the requested data.

```bash
curl -X POST https://api.firecrawl.dev/v1/extract \
    -H 'Content-Type: application/json' \
    -H 'Authorization: Bearer YOUR_API_KEY' \
    -d '{
      "urls": [
        "https://firecrawl.dev/*",
        "https://docs.firecrawl.dev/",
        "https://www.ycombinator.com/companies"
      ],
      "prompt": "Extract the company mission, whether it is open source, and whether it is in Y Combinator from the page.",
      "schema": {
        "type": "object",
        "properties": {
          "company_mission": {
            "type": "string"
          },
          "is_open_source": {
            "type": "boolean"
          },
          "is_in_yc": {
            "type": "boolean"
          }
        },
        "required": [
          "company_mission",
          "is_open_source",
          "is_in_yc"
        ]
      }
    }'
```

```json
{
  "success": true,
  "id": "44aa536d-f1cb-4706-ab87-ed0386685740",
  "urlTrace": []
}
```

If you are using the SDKs, they will automatically poll for the result and return it:

```json
{
  "success": true,
  "data": {
    "company_mission": "Firecrawl is the easiest way to extract data from the web. Developers use us to reliably convert URLs into LLM-ready markdown or structured data with a single API call.",
    "supports_sso": false,
    "is_open_source": true,
    "is_in_yc": true
  }
}
```
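Since the returned data is meant to follow your schema, a quick client-side check can catch missing or mistyped fields. This is a shallow, hypothetical sketch (schema_problems) that checks only top-level required keys and simple types; a full JSON Schema validator would also cover nested objects and arrays. Extra keys, such as supports_sso in the sample above, are ignored.

```python
# Map JSON Schema type names to Python types for a shallow check.
JSON_TYPES = {
    "string": str,
    "boolean": bool,
    "number": (int, float),
    "integer": int,
    "object": dict,
    "array": list,
}


def schema_problems(schema, data):
    """List top-level problems: missing required keys and mismatched types.

    Hypothetical, shallow check; nested objects and arrays are not inspected.
    """
    problems = [f"missing: {name}" for name in schema.get("required", []) if name not in data]
    for name, spec in schema.get("properties", {}).items():
        if name not in data:
            continue
        expected = JSON_TYPES.get(spec.get("type"))
        value = data[name]
        # bool is a subclass of int in Python, so reject it unless "boolean" was asked for
        is_stray_bool = isinstance(value, bool) and spec.get("type") != "boolean"
        if expected is not None and (not isinstance(value, expected) or is_stray_bool):
            problems.append(f"wrong type: {name}")
    return problems
```

An empty list means the extracted object has every required field with the expected top-level type.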

LLM Extraction (Beta)

Used to extract structured data from scraped pages.

```bash
curl -X POST https://api.firecrawl.dev/v1/scrape \
    -H 'Content-Type: application/json' \
    -H 'Authorization: Bearer YOUR_API_KEY' \
    -d '{
      "url": "https://www.mendable.ai/",
      "formats": ["json"],
      "jsonOptions": {
        "schema": {
          "type": "object",
          "properties": {
            "company_mission": {
              "type": "string"
            },
            "supports_sso": {
              "type": "boolean"
            },
            "is_open_source": {
              "type": "boolean"
            },
            "is_in_yc": {
              "type": "boolean"
            }
          },
          "required": [
            "company_mission",
            "supports_sso",
            "is_open_source",
            "is_in_yc"
          ]
        }
      }
    }'
```

```json
{
  "success": true,
  "data": {
    "content": "Raw Content",
    "metadata": {
      "title": "Mendable",
      "description": "Mendable allows you to easily build AI chat applications. Ingest, customize, then deploy with one line of code anywhere you want. Brought to you by SideGuide",
      "robots": "follow, index",
      "ogTitle": "Mendable",
      "ogDescription": "Mendable allows you to easily build AI chat applications. Ingest, customize, then deploy with one line of code anywhere you want. Brought to you by SideGuide",
      "ogUrl": "https://mendable.ai/",
      "ogImage": "https://mendable.ai/mendable_new_og1.png",
      "ogLocaleAlternate": [],
      "ogSiteName": "Mendable",
      "sourceURL": "https://mendable.ai/"
    },
    "json": {
      "company_mission": "Train a secure AI on your technical resources that answers customer and employee questions so your team doesn't have to",
      "supports_sso": true,
      "is_open_source": false,
      "is_in_yc": true
    }
  }
}
```

Extracting without a schema (New)

You can now extract without a schema by just passing a prompt to the endpoint. The LLM chooses the structure of the data.

```bash
curl -X POST https://api.firecrawl.dev/v1/scrape \
    -H 'Content-Type: application/json' \
    -H 'Authorization: Bearer YOUR_API_KEY' \
    -d '{
      "url": "https://docs.firecrawl.dev/",
      "formats": ["json"],
      "jsonOptions": {
        "prompt": "Extract the company mission from the page."
      }
    }'
```
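The two JSON-format scrape bodies above differ only in jsonOptions. A hypothetical builder that produces either form is sketched below; allowing a schema and a prompt together is an assumption carried over from the Extract section's "a prompt and/or a schema" wording.

```python
def json_scrape_payload(url, schema=None, prompt=None):
    """Build a /v1/scrape body that requests the "json" format.

    Hypothetical helper mirroring the curl examples: pass a JSON Schema,
    a prompt, or (assumed to be allowed) both.
    """
    if schema is None and prompt is None:
        raise ValueError("provide a schema, a prompt, or both")
    json_options = {}
    if schema is not None:
        json_options["schema"] = schema
    if prompt is not None:
        json_options["prompt"] = prompt
    return {"url": url, "formats": ["json"], "jsonOptions": json_options}
```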

Interacting with the page with Actions (Cloud-only)

Firecrawl allows you to perform various actions on a web page before scraping its content. This is particularly useful for interacting with dynamic content, navigating through pages, or accessing content that requires user interaction.

Here is an example of how to use actions to navigate to google.com, search for Firecrawl, click on the first result, and take a screenshot.

```bash
curl -X POST https://api.firecrawl.dev/v1/scrape \
    -H 'Content-Type: application/json' \
    -H 'Authorization: Bearer YOUR_API_KEY' \
    -d '{
        "url": "google.com",
        "formats": ["markdown"],
        "actions": [
            {"type": "wait", "milliseconds": 2000},
            {"type": "click", "selector": "textarea[title=\"Search\"]"},
            {"type": "wait", "milliseconds": 2000},
            {"type": "write", "text": "firecrawl"},
            {"type": "wait", "milliseconds": 2000},
            {"type": "press", "key": "ENTER"},
            {"type": "wait", "milliseconds": 3000},
            {"type": "click", "selector": "h3"},
            {"type": "wait", "milliseconds": 3000},
            {"type": "screenshot"}
        ]
    }'
```
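Writing action lists by hand gets verbose, so small builder functions can help. The helpers below (wait, click, write, press, screenshot) are hypothetical and simply emit the same JSON objects used in the example, rebuilding its request body.

```python
import json


# Hypothetical helpers that emit the action objects used in the example above.
def wait(milliseconds):
    return {"type": "wait", "milliseconds": milliseconds}


def click(selector):
    return {"type": "click", "selector": selector}


def write(text):
    return {"type": "write", "text": text}


def press(key):
    return {"type": "press", "key": key}


def screenshot():
    return {"type": "screenshot"}


# Rebuild the Google example: search for "firecrawl", open the first result, capture it.
payload = {
    "url": "google.com",
    "formats": ["markdown"],
    "actions": [
        wait(2000), click('textarea[title="Search"]'),
        wait(2000), write("firecrawl"),
        wait(2000), press("ENTER"),
        wait(3000), click("h3"),
        wait(3000), screenshot(),
    ],
}

body = json.dumps(payload)  # JSON text suitable as the request body
```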

Batch Scraping Multiple URLs (New)

You can now batch scrape multiple URLs at the same time. It works much like the /crawl endpoint: it submits a batch scrape job and returns a job ID to check the status of the batch scrape.

```bash
curl -X POST https://api.firecrawl.dev/v1/batch/scrape \
    -H 'Content-Type: application/json' \
    -H 'Authorization: Bearer YOUR_API_KEY' \
    -d '{
      "urls": ["https://docs.firecrawl.dev", "https://docs.firecrawl.dev/sdks/overview"],
      "formats" : ["markdown", "html"]
    }'
```
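For very large URL lists you may prefer to submit several batch jobs rather than one. The sketch below splits a list into batch scrape request bodies; batch_payloads is hypothetical, and chunk_size is an arbitrary caller choice, not a documented API limit.

```python
def batch_payloads(urls, chunk_size=500, formats=("markdown", "html")):
    """Split URLs into /v1/batch/scrape bodies of at most chunk_size URLs each.

    Hypothetical helper: chunk_size is arbitrary, not a documented limit.
    Exact duplicate URLs are dropped, keeping first-seen order.
    """
    if chunk_size < 1:
        raise ValueError("chunk_size must be at least 1")
    unique = list(dict.fromkeys(urls))  # dicts preserve insertion order
    return [
        {"urls": unique[i:i + chunk_size], "formats": list(formats)}
        for i in range(0, len(unique), chunk_size)
    ]
```

Each returned body has the same shape as the curl example and would be submitted as its own job.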

Search

The search endpoint combines web search with Firecrawl’s scraping capabilities to return full page content for any query.

Include scrapeOptions with formats: ["markdown"] to get the complete markdown content for each search result; otherwise, the endpoint returns only SERP results (url, title, description).

```bash
curl -X POST https://api.firecrawl.dev/v1/search \
    -H 'Content-Type: application/json' \
    -H 'Authorization: Bearer YOUR_API_KEY' \
    -d '{
      "query": "What is Mendable?"
    }'
```

```json
{
  "success": true,
  "data": [
    {
      "url": "https://mendable.ai",
      "title": "Mendable | AI for CX and Sales",
      "description": "AI for CX and Sales"
    }
  ]
}
```
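The sketch below builds the search request body with or without full-page content, following the scrapeOptions rule described above, and turns a SERP-style response into (title, url) pairs. Both helpers are hypothetical.

```python
def search_payload(query, full_content=False):
    """Build a /v1/search body; full_content adds scrapeOptions for markdown."""
    body = {"query": query}
    if full_content:
        body["scrapeOptions"] = {"formats": ["markdown"]}
    return body


def serp_rows(response):
    """Turn a search response into (title, url) pairs, in result order."""
    return [(item.get("title"), item.get("url")) for item in response.get("data", [])]
```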

Using Python SDK

Installing Python SDK

```bash
pip install firecrawl-py
```

Crawl a website

```python
from firecrawl.firecrawl import FirecrawlApp

app = FirecrawlApp(api_key="fc-YOUR_API_KEY")

# Scrape a website:
scrape_status = app.scrape_url(
    'https://firecrawl.dev',
    params={'formats': ['markdown', 'html']}
)
print(scrape_status)

# Crawl a website:
crawl_status = app.crawl_url(
    'https://firecrawl.dev',
    params={
        'limit': 100,
        'scrapeOptions': {'formats': ['markdown', 'html']}
    },
    poll_interval=30
)
print(crawl_status)
```

Extracting structured data from a URL

With LLM extraction, you can easily extract structured data from any URL. We support Pydantic schemas to make it easier for you too. Here is how to use it:

```python
from typing import List

from pydantic import BaseModel, Field
from firecrawl.firecrawl import FirecrawlApp

app = FirecrawlApp(api_key="fc-YOUR_API_KEY")

class ArticleSchema(BaseModel):
    title: str
    points: int
    by: str
    commentsURL: str

class TopArticlesSchema(BaseModel):
    # Pydantic v2 caps list length with max_length (max_items is deprecated)
    top: List[ArticleSchema] = Field(..., max_length=5, description="Top 5 stories")

# Request the "json" format and pass the generated JSON Schema
data = app.scrape_url('https://news.ycombinator.com', {
    'formats': ['json'],
    'jsonOptions': {
        'schema': TopArticlesSchema.model_json_schema()
    }
})

print(data["json"])
```

Using the Node SDK

Installation

To install the Firecrawl Node SDK, you can use npm:

```bash
npm install @mendable/firecrawl-js
```

Usage

- Get an API key from firecrawl.dev

- Set the API key as an environment variable named FIRECRAWL_API_KEY or pass it as a parameter to the FirecrawlApp class.

```ts
import FirecrawlApp, { CrawlParams, CrawlStatusResponse } from '@mendable/firecrawl-js';

const app = new FirecrawlApp({apiKey: "fc-YOUR_API_KEY"});

// Scrape a website
const scrapeResponse = await app.scrapeUrl('https://firecrawl.dev', {
  formats: ['markdown', 'html'],
});

if (scrapeResponse) {
  console.log(scrapeResponse)
}

// Crawl a website
const crawlResponse = await app.crawlUrl('https://firecrawl.dev', {
  limit: 100,
  scrapeOptions: {
    formats: ['markdown', 'html'],
  }
} satisfies CrawlParams, true, 30) satisfies CrawlStatusResponse;

if (crawlResponse) {
  console.log(crawlResponse)
}
```

Extracting structured data from a URL

With LLM extraction, you can easily extract structured data from any URL. We support Zod schemas to make it easier for you too. Here is how to use it:

```ts
import FirecrawlApp from "@mendable/firecrawl-js";
import { z } from "zod";

const app = new FirecrawlApp({
  apiKey: "fc-YOUR_API_KEY"
});

// Define schema to extract contents into
const schema = z.object({
  top: z
    .array(
      z.object({
        title: z.string(),
        points: z.number(),
        by: z.string(),
        commentsURL: z.string(),
      })
    )
    .length(5)
    .describe("Top 5 stories on Hacker News"),
});

// Request the "json" format and pass the Zod schema via jsonOptions,
// matching the formats/jsonOptions fields of the REST API
const scrapeResult = await app.scrapeUrl("https://news.ycombinator.com", {
  formats: ["json"],
  jsonOptions: { schema: schema },
});

if (!scrapeResult.success) {
  throw new Error(`Failed to scrape: ${scrapeResult.error}`);
}

console.log(scrapeResult.json);
```

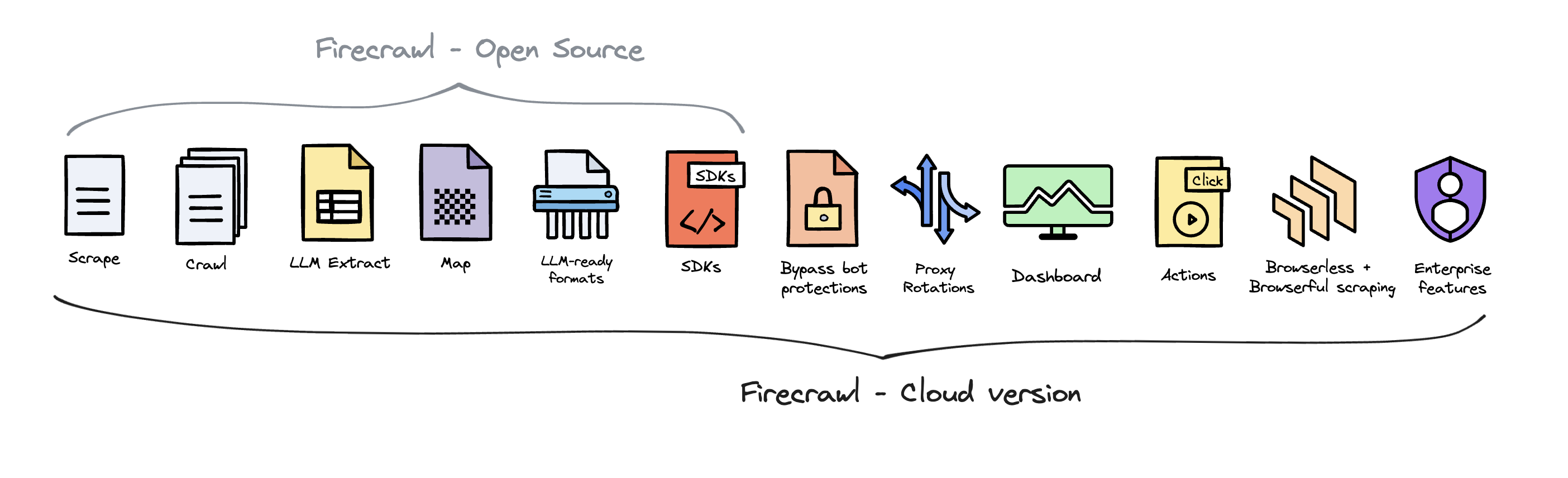

Open Source vs Cloud Offering

Firecrawl is open source, available under the AGPL-3.0 license.

To deliver the best possible product, we offer a hosted version of Firecrawl alongside our open-source offering. The cloud solution allows us to continuously innovate and maintain a high-quality, sustainable service for all users.

Firecrawl Cloud is available at firecrawl.dev and offers a range of features that are not available in the open-source version.

Contributing

We love contributions! Please read our contributing guide before submitting a pull request. If you'd like to self-host, refer to the self-hosting guide.

It is the sole responsibility of the end users to respect websites' policies when scraping, searching and crawling with Firecrawl. Users are advised to adhere to the applicable privacy policies and terms of use of the websites prior to initiating any scraping activities. By default, Firecrawl respects the directives specified in the websites' robots.txt files when crawling. By utilizing Firecrawl, you expressly agree to comply with these conditions.

Contributors

<a href="https://github.com/mendableai/firecrawl/graphs/contributors"> <img alt="contributors" src="https://contrib.rocks/image?repo=mendableai/firecrawl"/> </a>

License Disclaimer

This project is primarily licensed under the GNU Affero General Public License v3.0 (AGPL-3.0), as specified in the LICENSE file in the root directory of this repository. However, certain components of this project are licensed under the MIT License. Refer to the LICENSE files in these specific directories for details.

Please note:

- The AGPL-3.0 license applies to all parts of the project unless otherwise specified.

- The SDKs and some UI components are licensed under the MIT License. Refer to the LICENSE files in these specific directories for details.

- When using or contributing to this project, ensure you comply with the appropriate license terms for the specific component you are working with.

For more details on the licensing of specific components, please refer to the LICENSE files in the respective directories or contact the project maintainers.

<p align="right" style="font-size: 14px; color: #555; margin-top: 20px;"> <a href="#readme-top" style="text-decoration: none; color: #007bff; font-weight: bold;"> ↑ Back to Top ↑ </a> </p>
